Lenovo WA5480G3 24-Bay High-Density AI/Compute Server with Dual 16-Core CPUs and Multi-Tier NVMe Storage

- Product category: Servers

- Part number: Lenovo WA5480G3

- Availability: In Stock

- Condition: New

- Product features: Ready to ship

- Minimum order: 1 unit

- List price: $38,999.00

- Your price: $35,715.00 (you save $3,284.00)


Rest assured: returns are accepted.

Shipping: International shipments may incur customs charges.

Delivery: Customs clearance may add extra time.

Returns: 14 days. Return shipping is paid by the seller.

Free shipping. We accept NET 30 purchase orders.

If you need the Lenovo WA5480G3 in bulk, call on WhatsApp at (+86) 151-0113-5020 or request a quote via live chat.

Lenovo WA5480G3 24-Bay High-Density AI/Compute Server with Dual 16-Core CPUs & Multi-Tier NVMe Storage

Keywords

Lenovo WA5480G3, 24-bay server chassis, dual 6544Y CPUs, 2 × 16-core 3.6 GHz, 768 GB DDR5 memory, 3.2 TB NVMe drives, 400 GbE ConnectX-7, Lenovo AI server sale, buy WA5480G3 online

Description

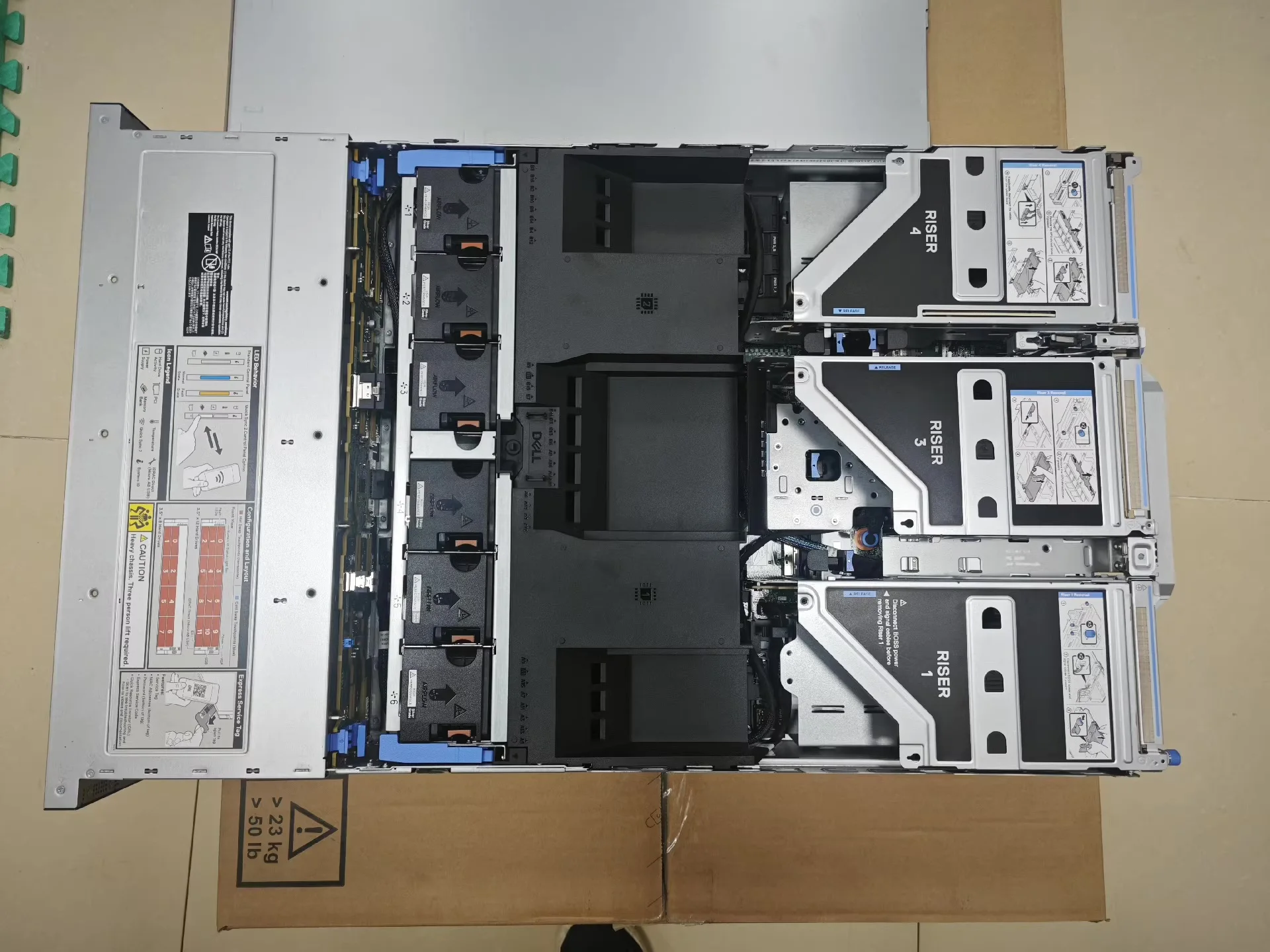

The Lenovo WA5480G3 is built for demanding AI, HPC and data-intensive workloads, combining high-density compute with multi-tier storage. This configuration features dual Intel Xeon Gold 6544Y+ processors (16 cores each at a 3.6 GHz base clock, 32 cores in total), suited to heavy compute throughput and to hosting accelerated inference or training workflows.

The system includes 12 × 64 GB DDR5 ECC modules (768 GB total), providing high bandwidth and capacity for large datasets and concurrent tasks. Storage spans four tiers: 8 × 3.2 TB NVMe drives, 4 × 480 GB SSDs, 6 × 2.4 TB SSDs, and 2 × 960 GB M.2 drives, pairing a fast NVMe tier with additional SSD capacity.

Networking comprises a dual-port 10/25 GbE OCP adapter (Broadcom 57412), a quad-port 1 GbE adapter (Broadcom 5270) and a 400 GbE ConnectX-7 card, supporting high-throughput, low-latency data movement across nodes. The chassis provides 24 drive bays and is fitted with four 2700 W redundant power supplies to deliver fault-tolerant power for sustained workloads.

For an AI server build or a scalable compute node, this configuration combines high capacity, high performance and enterprise-grade reliability.

Key Features

- Dual Intel Xeon Gold 6544Y+ CPUs (16 cores each, 3.6 GHz base) for high compute density

- 768 GB DDR5 (12 × 64 GB) ECC memory for large-scale datasets and concurrent processing

- 24-bay chassis (front-accessible drive bays) with multi-tier storage: 8 × 3.2 TB NVMe + 4 × 480 GB SSD + 6 × 2.4 TB SSD + 2 × 960 GB M.2 for boot/metadata

- Dual-port 10/25 GbE OCP adapter (Broadcom 57412) plus quad-port 1 GbE adapter (Broadcom 5270) for networking flexibility

- 400 GbE ConnectX-7 adapter for ultra-high bandwidth interconnects

- Four 2700 W redundant hot-plug power supplies for enterprise reliability

- Rails included for rack mounting and serviceable design

- Optimized for AI / HPC workloads with multi-tier storage and high I/O paths

Configuration

| Qty | Component | Details |
|---|---|---|
| 1 | Server Model | Lenovo WA5480G3 (24-bay chassis) |
| 2 | Processors | Intel Xeon Gold 6544Y+ (16C each, 3.6 GHz base) |
| 12 | Memory Modules | 64 GB DDR5 ECC each (total 768 GB) |
| 8 | NVMe Drives | 3.2 TB NVMe each |
| 4 | SSD Drives | 480 GB SSD each |
| 6 | SSD Drives | 2.4 TB SSD each |
| 2 | M.2 Drives | 960 GB M.2 each (boot / metadata) |
| 1 | 10/25 GbE Adapter | Broadcom 57412 dual-port OCP |
| 1 | 1 GbE Adapter | Broadcom 5270 quad-port |
| 1 | 400 GbE Adapter | ConnectX-7 |
| 4 | Power Supplies | 2700 W each, redundant hot-plug |
| 1 | Rail Kit | Rack mounting rails included |
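As a quick sanity check on the figures in the table, the raw storage per tier and the total memory can be tallied with a short script. This is a minimal sketch: the quantities and sizes are copied from the listing, while the names `DriveTier`, `TIERS`, `raw_capacity_gb` and `memory_total_gb` are hypothetical helpers introduced here. Capacities are raw decimal figures, before any RAID, filesystem or over-provisioning overhead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DriveTier:
    name: str
    count: int
    size_gb: int  # decimal gigabytes, as quoted in the listing


# Quantities and sizes taken from the configuration table.
TIERS = [
    DriveTier("NVMe 3.2 TB", 8, 3200),
    DriveTier("SSD 480 GB", 4, 480),
    DriveTier("SSD 2.4 TB", 6, 2400),
    DriveTier("M.2 960 GB", 2, 960),
]


def raw_capacity_gb(tiers):
    """Sum of count * size over all tiers, in decimal GB (raw, no RAID)."""
    return sum(t.count * t.size_gb for t in tiers)


def memory_total_gb(modules=12, module_gb=64):
    """Total installed memory for the listed 12 x 64 GB modules."""
    return modules * module_gb


if __name__ == "__main__":
    for t in TIERS:
        print(f"{t.name}: {t.count * t.size_gb / 1000:.2f} TB raw")
    print(f"All tiers: {raw_capacity_gb(TIERS) / 1000:.2f} TB raw")
    print(f"Memory: {memory_total_gb()} GB")
```

Usable capacity will be lower than the raw total and depends on the RAID level and filesystem chosen, which the listing does not specify.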

Compatibility

The Lenovo WA5480G3 supports dual-socket configurations and is designed for AI and heterogeneous compute deployments. The official specification states it can support up to 10 double-width GPUs and is designed for large memory and storage configurations.

The chassis supports high-density drive configurations, including NVMe and mixed drive types, and the server supports PCIe Gen5 and OCP 3.0 network cards, consistent with the 400 GbE ConnectX-7 and 10/25 GbE adapters in this build.

Usage Scenarios

This server is suited to **AI training and inference clusters**, where large datasets, high memory bandwidth and fast storage paths are key. With 768 GB of DDR5 memory and an 8 × 3.2 TB NVMe tier (25.6 TB raw), the system can hold large working sets in memory and serve them from fast local storage.

It also fits **high-density compute nodes** in HPC environments, where the 24-bay chassis lets you combine NVMe storage, GPUs and high-speed networking in a single 4U rack unit.

In **enterprise-scale infrastructure deployments**, this configuration provides compute, memory, storage and connectivity in one platform.

For **data-intensive or analytics workloads**, the tiered storage combining NVMe, SSDs and M.2 drives supports hot/cold data separation and high throughput across applications such as real-time analytics, caching layers or large-scale databases.

Frequently Asked Questions (FAQs)

1. Can the WA5480G3 in this configuration support 24 front-mounted drives?

Yes. This build ships with 8 NVMe drives and 10 SSDs, but the chassis is designed for high-bay configurations and supports up to 24 front-mounted drives.

2. Are the dual Intel Xeon Gold 6544Y+ CPUs (16 cores each) sufficient for GPU-accelerated workloads?

Yes. While the WA5480G3 is often used with GPU configurations (up to 10 double-width accelerators), the dual 16-core CPUs provide ample host compute for feeding GPUs, managing I/O and orchestration tasks, especially in high-performance AI environments.

3. How is the multi-tier storage structured in this server?

This build includes 8 × 3.2 TB NVMe drives for ultra-fast I/O, 4 × 480 GB SSDs and 6 × 2.4 TB SSDs for mid-tier storage, and 2 × 960 GB M.2 drives for OS/metadata. This combination ensures performance and capacity across hot, warm and cold storage tiers.

4. What network bandwidth does this configuration offer?

The system uses a Broadcom 57412 dual-port 10/25 GbE OCP adapter and a Broadcom 5270 quad-port 1 GbE adapter, along with a 400 GbE ConnectX-7 card, enabling very high throughput for data movement, cluster interconnect or AI-distributed workloads.
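To put these port speeds in perspective, the sketch below adds up the nominal line rates and estimates how long a bulk transfer would take at a given link speed. It is illustrative only: the port counts for the Broadcom adapters come from the listing, but the ConnectX-7 port count is not stated, so a single 400 GbE port is assumed. The names `ADAPTERS`, `aggregate_gbps` and `transfer_seconds` are hypothetical, and the estimate ignores protocol overhead, PCIe limits and storage throughput.

```python
# (port count, nominal Gb/s per port) for each adapter in this build.
ADAPTERS = {
    "Broadcom 57412 OCP (10/25 GbE)": (2, 25),
    "Broadcom 5270 (1 GbE)": (4, 1),
    # ASSUMPTION: one 400 GbE port; the listing does not give a port count.
    "ConnectX-7 (400 GbE)": (1, 400),
}


def aggregate_gbps(adapters):
    """Sum of nominal line rates across all ports, in Gb/s."""
    return sum(ports * speed for ports, speed in adapters.values())


def transfer_seconds(size_gb, link_gbps):
    """Idealized time to move size_gb (decimal GB) over a link at line rate."""
    return size_gb * 8 / link_gbps


if __name__ == "__main__":
    print(f"Aggregate nominal bandwidth: {aggregate_gbps(ADAPTERS)} Gb/s")
    # Moving the full 25.6 TB NVMe tier over one 400 GbE link, best case.
    print(f"25.6 TB at 400 Gb/s: {transfer_seconds(25600, 400):.0f} s")
    print(f"25.6 TB at 25 Gb/s: {transfer_seconds(25600, 25):.0f} s")
```

Real-world throughput is typically well below these line-rate figures, so treat the results as upper bounds rather than expected performance.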

Related Products

For enterprise workloads, with Tesla L2 GPU... - Part number: Inspur NF5280M6...

- Availability: In Stock

- Condition: New

- List price: $13,999.00

- Your price: $11,099.00

- You save: $2,900.00

Inspur NF5466M6 Dual Intel Xeon 4314 Enterpri... - Part number: Inspur NF5466M6...

- Availability: In Stock

- Condition: New

- List price: $23,499.00

- Your price: $19,750.00

- You save: $3,749.00

Dell PowerEdge R760xs - Dual Xeon Silver 4410Y ... - Part number: Dell PowerEdge R760x...

- Availability: In Stock

- Condition: New

- List price: $9,999.00

- Your price: $7,699.00

- You save: $2,300.00

Dell PowerEdge R760xs - Xeon Gold 6507P Performa... - Part number: Dell PowerEdge R760x...

- Availability: In Stock

- Condition: New

- List price: $9,999.00

- Your price: $8,808.00

- You save: $1,191.00

Dell PowerEdge R660 1U Rack Server - Dual Xeon Gold... - Part number: Dell PowerEdge R660...

- Availability: In Stock

- Condition: New

- List price: $31,299.00

- Your price: $25,736.00

- You save: $5,563.00

HPE ProLiant DL380 Gen11 2U Rack Server | Dual Intel... - Part number: HPE ProLiant DL380...

- Availability: In Stock

- Condition: New

- List price: $24,899.00

- Your price: $22,858.00

- You save: $2,041.00

Inspur NF8480M5 4U Enterprise Storage Server - ... - Part number: Inspur NF8480M5...

- Availability: In Stock

- Condition: New

- List price: $24,999.00

- Your price: $22,499.00

- You save: $2,500.00

Lenovo ThinkSystem SR850 V3 High-Performance 4-CPU Ser... - Part number: Lenovo ThinkSystem S...

- Availability: In Stock

- Condition: New

- List price: $45,799.00

- Your price: $38,499.00

- You save: $7,300.00